"PS9" (PS9)

02/14/2019 at 17:55 • Filed to: None

You have no business charging more than a thousand for a card with less than 16 GB of VRAM on it, and the 3/6/11 SKU designs are older than the bowling green massacre and NEED TO DIE.

4/8/16 for the next refresh or GTFAC. If the next Titan costs $3k or so, $1.5k of that better be for RAM, or someone's getting some Samsung GDDR5 modules installed somewhere unpleasant.

For Sweden

> PS9

02/14/2019 at 18:12

Why VRAM when you can IRAM?

Nibby

> PS9

02/14/2019 at 18:19

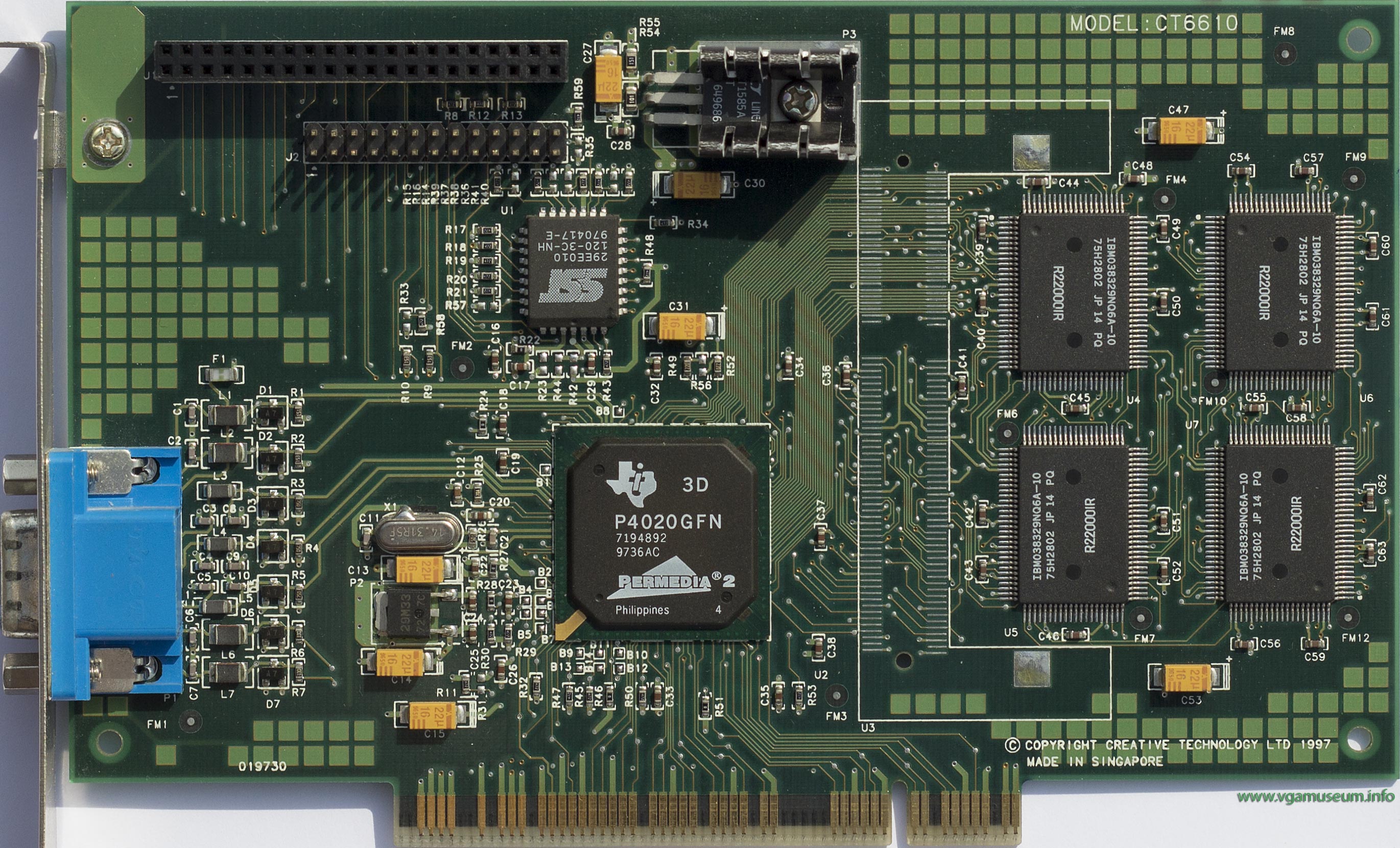

y u no S3 ViRGE

lone_liberal

> PS9

02/14/2019 at 18:28

$3k for a video card? I can get a crate engine for that much!

BaconSandwich is tasty.

> Nibby

02/14/2019 at 18:29

Riva TNT2.

Spamfeller Loves Nazi Clicks

> Nibby

02/14/2019 at 18:54

Because

My X-type is too a real Jaguar

> PS9

02/14/2019 at 19:13

Radeon FTW

Tekamul

> PS9

02/14/2019 at 19:36

Wait, are they still just on GDDR5? Honestly, I don't buy graphics cards, but I worked for Infineon a million years ago when GDDR5 was new. Nothing better down the pipe? I understand your displeasure.

Echo51

> Tekamul

02/14/2019 at 19:38

They have HBM but why throw money at new stuff when consumers will eat the old?

Tekamul

> Echo51

02/14/2019 at 20:02

HBM yield is still trash though, making it too expensive. I really hoped TSV alignment would be better by now.

PS9

> Echo51

02/14/2019 at 22:57

HBM (and the interposer you need to make it work) is rough on yields. Volume is a problem also, which is why all new HBM cards are pricey and in limited supply out of the gate. nVidia is not wrong for putting off migration to HBM in the mainstream space for as long as they can given that.

They are still super-wrong for trying to sell us 3GB cards in 2019. COUGH UP THE DAMN VRAM ALREADY NVIDIA

PS9

> Tekamul

02/14/2019 at 22:59

There are things way better than GDDR5 but I'm not gonna waste the good stuff. It's not gonna be memorizing anything anyway given where it's going.

PS9

> Nibby

02/14/2019 at 23:00

Why you hurt me with tragic memories of my PC gaming past...

Josh - the lost soldier

> PS9

02/14/2019 at 23:10

16 GB of HBM sounds like SEX to me also, but I've been gaming on the GTX 1070 8GB GDDR5 with no issues. Not sure if 16 GB is needed just yet. Still, Radeon VII is going to be a beast in memory!

PS9

> Josh - the lost soldier

02/15/2019 at 02:15

Screw need! nVidia jacks up the price like $100-200 every generation now and we don't even get more memory for it! The upcoming 1660 Ti is rumored to be a $275 card with threeeeee GB of RAM??? They better not be thinking about charging $400 for the base card with only 3GB next time they launch a new architecture because they can git da fuk on up outta here wit dat!

404 - User No Longer Available

> My X-type is too a real Jaguar

02/15/2019 at 11:39

But AMD is the culprit here for all the high prices. I mean look at their recent products. RX 590 and Radeon VII are both complete jokes. Even the Vega 56/64 is borderline stupid, you have to tweak the card out of the box to undervolt it for it to get any good performance and acceptable power usage.

nVidia practically has no competition here.

PS9

> 404 - User No Longer Available

02/15/2019 at 23:06

Perusing a review of the 590, I see that it is faster than its nearest competitor - the 1060 6GB - in every benchmark test across both the DX11 and DX12 suite. Most of the time it's a small gain of 10% or so, but in Shadow of War, the gap is 20 fps wide. That can mean the difference between a playable experience or not at this tier of GPU performance. The 1060 6GB is currently getting kicked out of the $300 tier due in part to the 2060 launch and the 590, which for a short time offered superior performance for almost $100 less than the 1060 6GB.

As for the Radeon VII, its performance against the higher end RTX cards has been sporadic. But prior to its launch, nVidia was the only option for those who wanted 4K at 60 fps from a single card. It's also pretty great at some specific OpenCL applications. It's tempting to brush that aside, but remember: the exponential growth of the GPU market worldwide is due in large part to people who had no use for PC gaming suddenly finding a use case for performance they could only get from a discrete GPU.

"Even the Vega 56/64 is borderline stupid, you have to tweak the card out of the box to undervolt it for it to get any good performance and acceptable power usage."

The Vega 64 doesn't have just 'good' performance. It matches, and in many cases exceeds, the performance of the RTX 2070. Considering that the 2070 costs $100 more than the Vega, and that the 2070, as part of a newly launched architecture, has the benefit of years of R&D that the Vega, as an already finished product, does not, that performance is not merely 'good'. It is excellent. That it is almost the equal of the 2070 for $100 less is one of the reasons Vega supplies have been dwindling and prices have been going up.

It is true that the Vega series are thirsty cards. 74 watts over the 2080 Ti even, and a bit less than double the 2070. But I'm not really buying the idea that people who build what will be a $1500-2000 PC are going to be fretting much over that. Is a ~300 watt GPU really a bridge too far for a system with an 850W power supply and what could be a 12-16 core CPU in it? Vega 64s wouldn't be getting pricey and scarce post 2070 launch if that were true.